It seems like wherever you look, voice control and dictation are getting added to every app, operating system and game console. We like to make fun of how badly it works, but I decided to dive in headfirst to see what it’s like to actually use it… for everything. Here’s what happened.

Why I Bothered With Dictation In the First Place

Like any true sci-fi nerd, I’ve always been intrigued by voice control and dictation. It looks so cool in the movies, and it’s getting closer every day. Whether we like it or not, we’ll probably be controlling our computers and phones with our voices a lot more in the coming years.

Dictation has a certain romantic quality to it; it’s the modern equivalent of muttering your thoughts into a tape recorder, with the added benefit of writing down what you say, as you say it. For someone who types all day long, this sounds awesome. Maybe I could write while walking. Or, let’s be honest, maybe I could write without ever getting out of bed or even sitting up. (That’s the dream, right?)

Even though I had low expectations for how this was going to go, the potential whimsy of enjoying talking to my electronic devices won out. Would I look and sound silly? Yes. Would I annoy my friends by responding to text messages in public places by talking to my phone? Yes. But there was a chance I might fall in love with this, and that made it worth trying out.

Day 1: Learning the Ropes

You’d think, from watching countless sci-fi movies, that voice control is rather intuitive. As I quickly learnt on my first day, it’s not. I started by just attempting to write a few blog posts using dictation. Here’s an excerpt from my first attempt, just to give you an idea of how poorly I was grasping how to use this:

,DeleteBackspaceTalkHaveJessica how does you’re talking to my phone sounds good

As should be pretty clear, the first thing that happened was an incorrect word, which I then attempted to delete. After that, I just ranted at my computer for a little while. OK, so this is going to take a little more research on my part to get the hang of the whole thing.

Thankfully, we have a guide for doing just that. So, I pulled my microphone over, learnt the basics of formatting (saying “comma” and “period”), and got back to it. I tackled a pretty easy little post first. Here’s what initially came out:

This quote comes to us from our recent interview on Freakonomics with wired cofounder Kevin Kelly, and is a nice reminder that it will doesn’t need a specific definition.

OK, that’s a heck of a lot better than my first attempt, and it even got Freakonomics right. I did still need to pop back in and capitalise Wired, change “it will” to “tool”, and add a hyphen to co-founder, but it was certainly better than before. The microphone, combined with a basic understanding of punctuation commands, clearly helps make things more readable.

However, what tripped me up at this point wasn’t the speech-to-text mishaps; it was the actual act of speaking what I wanted typed. This isn’t as intuitive as I thought it would be, and it turns out I take a lot of long pauses while I think of what I want typed next. Writing on a keyboard allows you plenty of time to sit and ruminate on your next sentence, while dictation makes you want to move a lot quicker. It took a little while for my brain to adjust to this.

It’s worth pointing out here that using dictation on my iPhone for short replies to text messages and emails was going a lot smoother. Because text conversations are so succinct, dictating on my phone was a lot easier and something I enjoyed doing, despite how obnoxious it is to do in public.

Day 2: Customising and Learning to Control My Computer

As my second day of using dictation rolled around, I realised I needed to dig in a bit more if I was going to make this useful. That meant learning actual voice commands instead of just dictating.

Using your voice is more than just dictating what you want to say; it’s also about doing a bit of on-the-fly editing. On a Mac, it turns out you have to enable dictation commands if you want full control:

- Open up System Preferences

- Head over to Accessibility

- Choose Dictation

- Click the Dictation Commands button

- Click the “Enable advanced commands” checkbox

With advanced commands enabled, I can control my computer, open apps and edit text. This is also where I discovered my mistake from day 1: to delete an errant word, the command isn’t just “delete”, it’s “delete that”. Now I can edit with commands such as “cut that”, “copy that”, “undo that” and “capitalise that”. If you’re ever unsure of what to say to trigger an action, you can always say “show commands” to get a pop-up of available commands.

Windows users have their own set of commands. They’re pretty similar overall, although on Windows you can just say “delete” instead of “delete that”. I don’t have any experience with Windows Speech Recognition, but turning it on is really simple:

- Open Speech Recognition by clicking the Start button > All Programs > Accessories > Ease of Access

- Click on Windows Speech Recognition

- Say “start listening” or click the microphone button

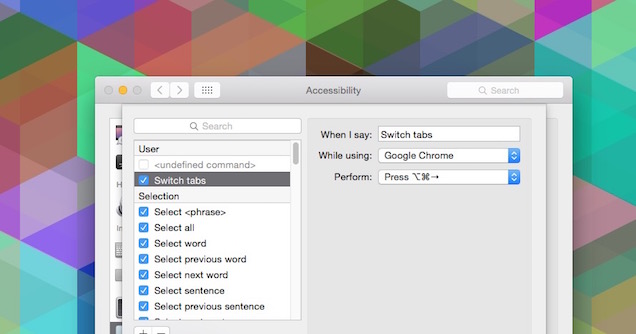

The advanced dictation commands on Mac also let you control apps. You can use commands like “switch to [application name]”, “open document” and “click [item name]” to control whatever you want. No command listed for an action you want to perform? In that same System Preferences menu, click the “+” button to add your own. Just type in the phrase you want to trigger an action, pick the application you want to control, then pick the action that will be performed. Personally, I stuck with having my custom commands trigger keyboard shortcuts.

For example, being able to say “switch tabs” in Chrome, by mapping that phrase to the Command+Option+Arrow shortcut, completely changed how I was using voice commands. If you really want to get advanced with it, you can also trigger Automator actions, though my basic usage didn’t require me to go that far.

Siri has its own learning curve: it’s all about knowing the language you can and can’t use. To its credit though, Siri (and Google Now) are way more intuitive than voice commands on a desktop computer. Controlling everything on your phone with your voice requires almost no effort, and once you get the hang of looking like a weirdo, it’s kind of delightful to use. It’s only day 2 and I’m already lazing around, too concerned with comfort to make the effort to use both hands to text someone. It’s truly a sad sight, but I honestly don’t care.

Day 5: Finally Getting Comfortable

Day 4 passes by with little insight, but by the fifth day I’m finally starting to find my groove. I’m able to not only do my job, but do it somewhat effectively.

At this point, I’ve set up voice commands for nearly everything I need. I can switch tabs, switch windows, launch apps, control specific functions in apps (for example, saying “next” to move between RSS stories in Reeder), and carry on with the bulk of my day without ever touching the keyboard or mouse. It’s kind of cool, although I can feel my voice growing a little hoarse.

The dictation itself is also starting to click. It’s a complete rewiring of the brain: where you once communicated by typing, you’re now doing so by speaking, so it takes a while to get the hang of it. Where the first couple of days produced very bland, simple sentences, I’m getting better at including my “voice” in what I’m saying. You’d think it’d be the opposite, with speaking naturally bringing more of your personality to the table, but that took training for me. I simply don’t talk like I type. Plus, I get to pace around a lot while I dictate, which suits me surprisingly well.

As an aside, I should also note that dictation commands were seeping into my real life. In at least one conversation with a real person, I said “comma” out loud. I’m sure this was mostly due to the full immersion I was doing while researching this post, but it’s still worth mentioning. Thankfully, my faux pas was met with a healthy guffaw by everyone who heard it.

Day 7: Acceptance and the Return of the Keyboard

As I finish off my week, I’ve gotten the hang of both voice dictation and voice control. Both have their uses, but I’ve since returned to the keyboard and mouse.

In basically every article where dictation is mentioned, people like to point out that the article was written entirely with dictation. Often, there are funny little errors throughout, a lack of punctuation or a few odd wordings. I wrote this post entirely with dictation. But I also edited it with dictation. When I was done with that, I edited it using a keyboard and mouse. Then I sent it over to have other people edit it too. Unless you physically can’t type, dictation is just a tool. It’s not an end-all for writing. You still have to edit after you speak (after all, that’s part of the beauty of writing).

As for voice control, it’s fun for a couple of days, then gets a little annoying. For me, using keyboard shortcuts is faster. Navigating with your voice ends up being more obnoxious than it is useful, but at least you can eat Cheetos without messing up your mouse. Typing is also easier because my brain’s been fully wired to do just that. Sadly, I can type far better than I can speak, and even after a week of using dictation, the improvement there was minimal. It was a fun experiment, but I feel like the effort it would take to retrain my mind for dictation instead of typing isn’t worth it in the long run. Sure, I can lazily write articles while lying on the floor (or, conversely, while standing without owning a standing desk), but I should probably be sitting upright in a chair and typing for now.

However, I can see this being useful in a lot of situations. Dictation and voice control are handy if you have a computer set up as a media centre. They’re also useful if you’re the type who likes to pace around to get ideas out. Just don’t expect to do everything with them. I enjoyed my time using it, and I imagine it will be useful for brainstorming ideas or when I just need to get things out of my brain and onto paper without worrying too much about editing.

That said, I’m now a lot better at using Siri and use it constantly on my iPhone, albeit not during any social or public moments. It’s helpful pretty much anytime I can’t look at my phone, like when I’m walking, running, cycling or, y’know, too lazy to reach over and grab it off its dock. It also makes a lot more sense on mobile, because you’re far more likely to be unable to reach your phone than your computer. The car is the most obvious example, but cooking, eating or any other activity that keeps your hands busy makes it useful. It’s well worth the time to learn what you can do with Siri and Google Now, because if you don’t, you’ll never know when and how you can use them.

Comments

2 responses to “I Used Dictation And Voice Control For A Week. Here’s What Happened”

The most useful dictation I’ve found is talking to my watch to set a timer for X amount of time. It normally works pretty well if you can wake up the watch.

I’m fairly big on voice commands, which is why I’d love to bump my iPhone 5S up to a 6S: ‘Hey Siri’ functionality would be really useful when I’m not connected to power. I don’t use voice commands in normal situations, but I love using them for scenarios where I ought (or need) to be hands free, like having messages read out and replying to them while cycling, or setting a timer while I’m baking.

Not so big on dictation, though 😛